The software described in this documentation is either in Extended Support or Sustaining Support. See https://www.oracle.com/us/support/library/enterprise-linux-support-policies-069172.pdf for more information.

Oracle recommends that you upgrade the software described by this documentation as soon as possible.

When Keepalived is used with two or more servers, loss of network connectivity can result in a split-brain condition, in which more than one server acts as the primary server. This condition can result in data corruption. To avoid this scenario, Oracle recommends that, in preference to Keepalived, you use HAProxy in conjunction with a shoot the other node in the head (STONITH) solution such as Oracle Clusterware to support virtual IP address failover.

Oracle Clusterware is a portable clustering software solution that enables you to configure independent servers so that they cooperate as a single cluster. The individual servers within the cluster cooperate so that they appear to be a single server to external client applications.

The following example uses Oracle Clusterware with HAProxy for load balancing to HTTPD web server instances on each cluster node. If the node that is running HAProxy and an HTTPD instance fails, the services and their virtual IP addresses fail over to the other cluster node.

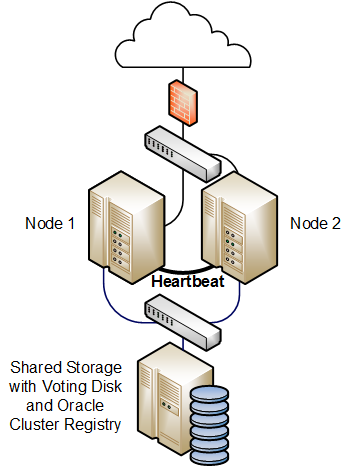

Figure 17.7 shows two cluster nodes, which are connected to an externally facing network. The nodes are also linked by a private network that is used for the cluster heartbeat. The nodes have shared access to certified SAN or NAS storage that holds the voting disk and Oracle Cluster Registry (OCR) in addition to service configuration data and application data.

For a high-availability configuration, Oracle recommends that the network, heartbeat, and storage connections are multiply redundant and that at least three voting disks are configured.

The following steps outline how to configure such a cluster:

Install Oracle Clusterware on each system that will serve as a cluster node.

Install the haproxy and httpd packages on each node.

Use the appvipcfg command to create a virtual IP address for HAProxy and a separate virtual IP address for each HTTPD service instance. For example, if there are two HTTPD service instances, you would need to create three different virtual IP addresses.

Implement cluster scripts to start, stop, clean, and check the HAProxy and HTTPD services on each node. These scripts must return 0 for success and 1 for failure.
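To illustrate the return-code convention, the following is a minimal sketch of what an action script for the HAProxy resource might look like. The file locations, the PID-file approach, and the function names haproxy_running and haproxy_action are assumptions made for this example and are not prescribed by Oracle Clusterware. A comparable script would be needed for each HTTPD service instance.

```shell
#!/bin/sh
# Sketch of a cluster action script for HAProxy.
# The paths below are assumed defaults; adjust them for your layout.
HAPROXY_BIN="${HAPROXY_BIN:-/usr/sbin/haproxy}"
HAPROXY_CFG="${HAPROXY_CFG:-/etc/haproxy/haproxy.cfg}"
HAPROXY_PID="${HAPROXY_PID:-/var/run/haproxy.pid}"

# Succeeds only if the PID file names a live process.
haproxy_running() {
    [ -s "$HAPROXY_PID" ] && kill -0 "$(cat "$HAPROXY_PID")" 2>/dev/null
}

# Performs one action and returns 0 for success or 1 for failure.
haproxy_action() {
    case "$1" in
        start)
            # Already running counts as success.
            haproxy_running && return 0
            "$HAPROXY_BIN" -D -f "$HAPROXY_CFG" -p "$HAPROXY_PID" || return 1
            ;;
        stop)
            # Already stopped counts as success; otherwise send SIGTERM.
            haproxy_running || return 0
            kill "$(cat "$HAPROXY_PID")" || return 1
            ;;
        check)
            haproxy_running || return 1
            ;;
        clean)
            # Forced cleanup: kill any survivor and remove the PID file.
            haproxy_running && kill -9 "$(cat "$HAPROXY_PID")"
            rm -f "$HAPROXY_PID"
            ;;
        *)
            echo "usage: {start|stop|check|clean}" >&2
            return 1
            ;;
    esac
    return 0
}

# The action name is expected as the first argument.
if [ "$#" -gt 0 ]; then
    haproxy_action "$1"
    exit $?
fi
```

In this sketch, the stop action only sends the signal and does not wait for the process to exit. A production script would probably poll until the process has gone before reporting success.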

Use the shared storage to share the configuration files, HTML files, logs, and all directories and files that the HAProxy and HTTPD services on each node require to start.

If you have an Oracle Linux Support subscription, you can use OCFS2 or ASM/ACFS with the shared storage as an alternative to NFS or another type of shared file system.

Configure each HTTPD service instance so that it binds to the correct virtual IP address. Each service instance must also have an independent set of configuration, log, and other required files, so that all of the service instances can coexist on the same server if one node fails.

Use the crsctl command to create a cluster resource for HAProxy and for each HTTPD service instance. If there are two or more HTTPD service instances, binding of these instances should initially be distributed amongst the cluster nodes. The HAProxy service can be started on either node initially.
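The virtual IP address and cluster resource steps above can be sketched as a dry-run script that only prints the commands an administrator might then review and run. The network number, the addresses (taken from the 192.0.2.0/24 documentation range), the resource and VIP names, the script directory, and the chosen attribute values are all hypothetical. The appvipcfg options and crsctl attributes follow the general forms given in the Oracle Clusterware Administration and Deployment Guide, and should be checked against the release that you have installed.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands instead of running them.
# Network number, addresses, names, and paths are all hypothetical.
NETWORK=1
SCRIPT_DIR=/shared/scripts

# Prints the VIP and cluster resource commands for one service.
plan_resource() {
    name=$1
    ip=$2
    vip="${name}-vip"
    echo "appvipcfg create -network=$NETWORK -ip=$ip -vipname=$vip -user=root"
    echo "crsctl add resource $name -type cluster_resource -attr \"ACTION_SCRIPT=$SCRIPT_DIR/$name.scr, CHECK_INTERVAL=30, RESTART_ATTEMPTS=2, START_DEPENDENCIES='hard($vip) pullup($vip)', STOP_DEPENDENCIES='hard($vip)'\""
}

# One HAProxy service plus two HTTPD instances: three virtual IP addresses.
plan_cluster() {
    plan_resource haproxy 192.0.2.10
    plan_resource httpd1 192.0.2.11
    plan_resource httpd2 192.0.2.12
}

plan_cluster
```

The dependency attributes tie each service to its own virtual IP address, so that the two move together on failover. To distribute the HTTPD instances initially, each resource could then be started on a chosen node, for example with crsctl start resource httpd1 -n node1, where node1 is a placeholder node name.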

You can use Oracle Clusterware as the basis of a more complex solution that protects a multi-tiered system consisting of front-end load balancers, web servers, database servers and other components.

For more information, see the Oracle Clusterware 11g Administration and Deployment Guide and the Oracle Clusterware 12c Administration and Deployment Guide.