Three-Node Architecture Overview

The architecture described in this chapter is deployed on the following three systems:

-

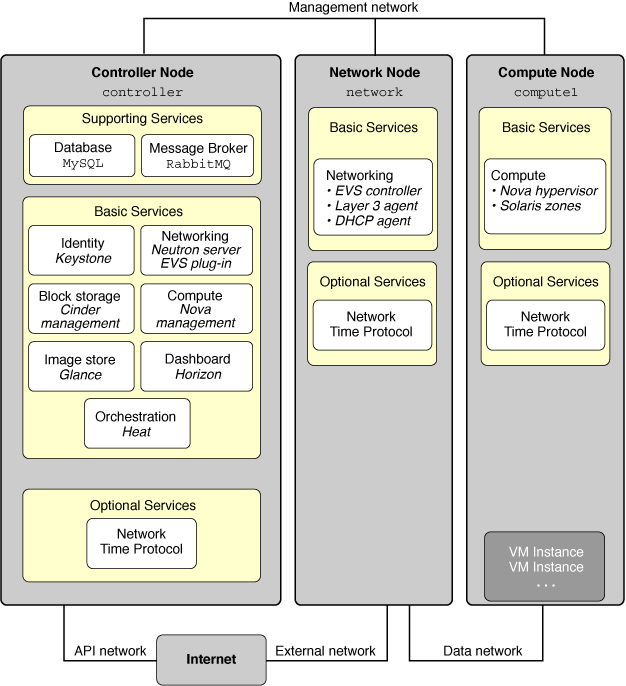

Controller node. The Controller node is where most of the shared OpenStack services and other tools run. The Controller node supplies API, scheduling, and other shared services for the cloud. The Controller node has the dashboard, the image store, and the identity service. Additionally, Nova compute management service as well as the Neutron server are also configured in this node.

- Network node. The Network node provides virtual networking and networking services to Nova instances by using the Neutron Layer 3 and DHCP network services.

- Compute node. The Compute node is where the VM instances (Nova compute instances) are installed. The VM instances use iSCSI targets provisioned by the Cinder volume service.

In this architecture, the three nodes share a common subnet called the management subnet. The Network node and each Compute node share a separate common subnet called the data subnet. Each system is attached to the management network through its net0 physical interface. The Network node and the Compute node are attached to the data network through their net1 physical interfaces.
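To make the subnet layout concrete, the following Python sketch records which physical interface attaches each node to each subnet and lists the members of each subnet. It is a hypothetical model for checking a plan on paper, not an Oracle Solaris or OpenStack API; the `Node` class and the `members` and `interface_for` helpers are illustrative names, and the node names follow the sample configuration (controller, network, compute1).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A node and the subnet each of its physical interfaces is attached to."""
    name: str
    links: dict[str, str] = field(default_factory=dict)  # interface -> subnet


# Hypothetical model of the sample layout: every node uses net0 for the
# management subnet; the Network and Compute nodes also use net1 for data.
NODES = [
    Node("controller", {"net0": "management"}),
    Node("network", {"net0": "management", "net1": "data"}),
    Node("compute1", {"net0": "management", "net1": "data"}),
]


def members(subnet: str, nodes: list[Node]) -> list[str]:
    """Return the names of the nodes that have an interface on `subnet`."""
    return [n.name for n in nodes if subnet in n.links.values()]


def interface_for(node: Node, subnet: str) -> str | None:
    """Return the interface that attaches `node` to `subnet`, or None."""
    for iface, net in node.links.items():
        if net == subnet:
            return iface
    return None


if __name__ == "__main__":
    print("management:", members("management", NODES))
    print("data:", members("data", NODES))
    print("compute1 data interface:", interface_for(NODES[2], "data"))
```

Running the module lists all three nodes on the management subnet and only the network and compute1 nodes on the data subnet, with compute1 reaching the data subnet through net1.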

The following figure shows a high-level view of the architecture described in this chapter.

Figure 3-1 Three-Node Configuration Reference Architecture

The following table shows which SMF services related to OpenStack are installed on each node. For each service, the table shows the shortest form of the service name that you can use with commands such as the svcadm command. The instance name of a service is listed only if the service name would be ambiguous without it.

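The naming rule described for the table can be illustrated with a small Python sketch. It assumes, as a simplification, that an abbreviated name matches an FMRI when its components equal the trailing components of the FMRI's service path and, if an instance is given, the instance name; the actual SMF matching logic may differ in detail. The FMRIs in `CATALOG` and the helpers `parse`, `matches`, and `shortest_abbreviation` are hypothetical, so list the real service names on your system with `svcs -a`.

```python
from __future__ import annotations

# Illustrative FMRIs (assumed for this sketch, not taken from a real system).
CATALOG = [
    "svc:/application/openstack/keystone:default",
    "svc:/application/openstack/nova/nova-compute:default",
    "svc:/application/openstack/cinder/cinder-volume:setup",
    "svc:/application/openstack/cinder/cinder-volume:default",
]


def parse(fmri: str) -> tuple[list[str], str]:
    """Split 'svc:/a/b/c:inst' into (['a', 'b', 'c'], 'inst')."""
    body = fmri.removeprefix("svc:/")
    path, _, instance = body.rpartition(":")
    return path.split("/"), instance


def matches(abbrev: str, fmri: str) -> bool:
    """Simplified rule: trailing path components (and instance, if given) must match."""
    name, _, instance = abbrev.partition(":")
    want = name.split("/")
    components, fmri_instance = parse(fmri)
    if components[-len(want):] != want:
        return False
    return instance in ("", fmri_instance)


def shortest_abbreviation(fmri: str, catalog: list[str]) -> str:
    """Return the shortest name that matches only `fmri` in `catalog`.

    The instance name is appended only when the service name alone is ambiguous.
    """
    components, instance = parse(fmri)
    for k in range(1, len(components) + 1):
        plain = "/".join(components[-k:])
        for candidate in (plain, f"{plain}:{instance}"):
            if sum(matches(candidate, f) for f in catalog) == 1:
                return candidate
    return fmri  # fall back to the full FMRI


if __name__ == "__main__":
    for fmri in CATALOG:
        print(shortest_abbreviation(fmri, CATALOG))
```

For these sample names, the script prints keystone, nova-compute, cinder-volume:setup, and cinder-volume:default. The two cinder-volume entries need their instance names because the service name alone matches both instances.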

This example architecture does not include the Swift object storage service. For general information about configuring Swift, see the OpenStack community documentation, such as the OpenStack Configuration Reference. For information about how to configure Swift services on Oracle Solaris systems, and for other information about OpenStack on Oracle Solaris, see OpenStack for Oracle Solaris 11.

For a list of OpenStack configuration parameters that are useful for OpenStack deployments on Oracle Solaris systems, see “Common Configuration Parameters for OpenStack” in Getting Started with OpenStack on Oracle Solaris 11.2.

To prepare for the implementation of the sample three-node OpenStack configuration, make sure that you have the following information:

- IP address and host name of the controller node.
- IP address and host name of the network node.
- IP address and host name of the compute node.
- Passwords for the different service users, as required.

For the sample configuration, the names of the three nodes are controller, network, and compute1.
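Before you start, you might record the information listed above in a small plan and check it. The following Python sketch, which uses only the standard `ipaddress` module, flags missing hosts, malformed addresses, duplicate addresses, and addresses outside the management subnet. The host names come from the sample configuration; the addresses and the `192.0.2.0/24` management subnet are placeholder assumptions taken from the documentation address range, and the `check_plan` helper is hypothetical. Service passwords are deliberately not modeled.

```python
from __future__ import annotations

import ipaddress

# Host names from the sample configuration.
REQUIRED_HOSTS = ("controller", "network", "compute1")

# Placeholder values (assumptions): replace them with your real addresses.
MGMT_SUBNET = ipaddress.ip_network("192.0.2.0/24")
PLAN = {
    "controller": "192.0.2.11",
    "network": "192.0.2.12",
    "compute1": "192.0.2.13",
}


def check_plan(plan: dict[str, str], subnet: ipaddress.IPv4Network) -> list[str]:
    """Return a list of problems found in a host-name-to-IP plan."""
    problems = []
    for host in REQUIRED_HOSTS:
        if host not in plan:
            problems.append(f"{host}: missing")
    seen = {}  # address -> first host that uses it
    for host, addr in plan.items():
        try:
            ip = ipaddress.ip_address(addr)
        except ValueError:
            problems.append(f"{host}: invalid IP address {addr!r}")
            continue
        if ip not in subnet:
            problems.append(f"{host}: {ip} is outside {subnet}")
        if ip in seen:
            problems.append(f"{host}: same address as {seen[ip]}")
        else:
            seen[ip] = host
    return problems


if __name__ == "__main__":
    print(check_plan(PLAN, MGMT_SUBNET) or "plan looks complete")
```

An empty result means that every sample host has a well-formed, unique address on the assumed management subnet.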