Three-Node Architecture Overview

Typically, you install and configure OpenStack across multiple systems, or nodes. Single-node configurations are useful for testing OpenStack as a product and for familiarizing yourself with its features. However, a single-node configuration is not suitable for a production environment.

Each cloud needs only one dashboard instance, one image store, and one identity service. Each cloud can have any number of storage and compute instances. In a production environment, these services are configured across multiple nodes. Evaluate each component with respect to your needs for a particular cloud deployment to determine whether that component should be installed on a separate node and how many of that type of node you need.

-

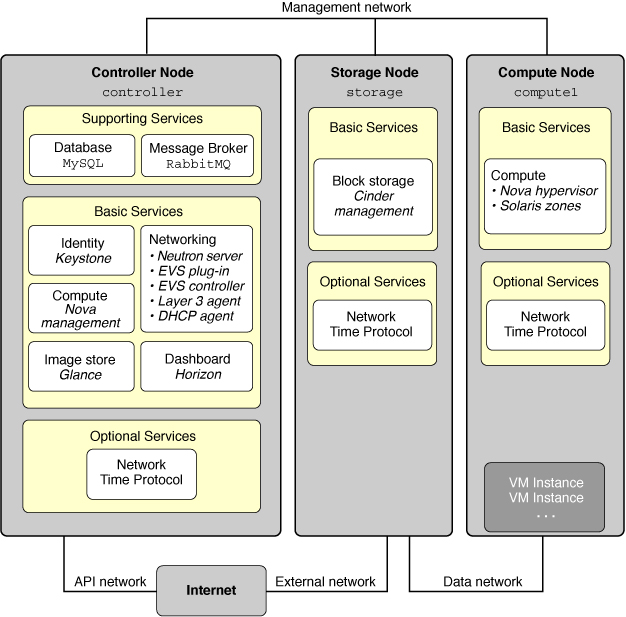

Controller node – node where most of the shared OpenStack services and other tools run. The controller node supplies API, scheduling, and other shared services for the cloud. The controller node has the dashboard, the image store, and the identity service. Additionally, Nova compute management service as well as the Neutron server are also configured in this node.

-

Compute node – node where VM instances, also known as Nova compute instances, are installed. The node runs the compute daemon that manages these VM instances.

-

Storage node – node that hosts the data.

The architecture described in this chapter is deployed on the following three systems:

Note - This documentation describes an architecture that is deployed on separate physical systems. To partition a single Oracle SPARC server and configure multi-node OpenStack on the server running OVM Server for SPARC (LDoms), see Multi-node Solaris 11.2 OpenStack on SPARC Servers. The article specifically refers to the Havana version of OpenStack. However, the general steps also apply to the current version.

The following figure shows a high-level view of the architecture described in this chapter.

Figure 4-1 Three-Node Configuration Reference Architecture
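The node roles described above, together with the rule that a cloud needs only one dashboard, one image store, and one identity service, can be sketched as a small consistency check. This is a minimal illustration, not part of any OpenStack or Oracle Solaris tooling: the host names (`controller-1`, `compute-1`, `storage-1`), the service labels, and the `check_layout` function are all assumptions introduced here, and the labels are informal names rather than actual service identifiers.

```python
from collections import Counter

# Services that the overview says each cloud needs only once.
# The labels are informal and hypothetical, not real service identifiers.
SINGLETON_SERVICES = {"dashboard", "image-store", "identity"}

# Hypothetical layout that mirrors the three roles in this chapter.
# Host names are placeholders chosen for this example.
LAYOUT = {
    "controller-1": {
        "dashboard",
        "image-store",
        "identity",
        "compute-management",
        "neutron-server",
    },
    "compute-1": {"compute-daemon"},
    "storage-1": {"storage"},
}


def check_layout(layout):
    """Return a list of problems; an empty list means no singleton rule is broken."""
    # Count how many nodes host each service label.
    counts = Counter(svc for services in layout.values() for svc in services)
    problems = []
    for svc in sorted(SINGLETON_SERVICES):
        if counts[svc] != 1:
            problems.append(f"{svc}: expected 1 instance, found {counts[svc]}")
    return problems


if __name__ == "__main__":
    for problem in check_layout(LAYOUT) or ["layout is consistent"]:
        print(problem)
```

Adding more compute or storage nodes to `LAYOUT` leaves the check passing, which matches the statement that a cloud can have any number of storage and compute instances. Adding a second node that also hosts the dashboard would be reported as a problem.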

This example architecture does not show the Swift object storage service. For general information about configuring Swift, see the documentation on the OpenStack community site, such as the OpenStack Configuration Reference. For information about how to configure Swift services on Oracle Solaris systems, and for other information about OpenStack on Oracle Solaris, see OpenStack for Oracle Solaris 11.

In Oracle Solaris, the elastic virtual switch (EVS) forms the back end for OpenStack networking. EVS facilitates communication between VM instances that are on either VLANs or VXLANs. The VM instances can be on the same compute node or spread across multiple compute nodes. For more information about EVS, refer to Chapter 5, About Elastic Virtual Switches, in Managing Network Virtualization and Network Resources in Oracle Solaris 11.2.

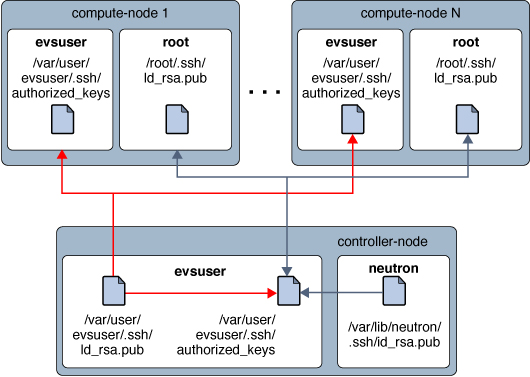

In order for the different nodes to communicate with one another, the SSH public keys of the evsuser, neutron, and root in the controller node must be in each of the evsuser's authorized_keys file in all configured compute nodes. Refer to the following image that shows the distribution of the SSH public keys. The image assumes that multiple compute nodes have been configured.

For a list of OpenStack configuration parameters that are useful for OpenStack deployments on Oracle Solaris systems, see “Common Configuration Parameters for OpenStack” in http://www.oracle.com/technetwork/articles/servers-storage-admin/getting-started-openstack-os11-2-2195380.html.

Figure 4-2 EVS Controller SSH Key Distribution
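The SSH key distribution shown in Figure 4-2 can be sketched as a function that computes what evsuser's authorized_keys file on each compute node should contain. This is a minimal sketch under stated assumptions: it only builds text and does not read files, run SSH, or copy anything between systems. The function names, the node names, and the key strings in the usage example are hypothetical placeholders introduced for illustration.

```python
# Controller-node users whose public keys must reach every compute node,
# as listed in the text above.
CONTROLLER_USERS = ("evsuser", "neutron", "root")


def authorized_keys_for_evsuser(controller_pubkeys, existing=""):
    """Return authorized_keys content for evsuser on one compute node.

    controller_pubkeys maps each controller user name to its public key line.
    Entries already in `existing` are kept, and no key is added twice.
    """
    missing = [u for u in CONTROLLER_USERS if u not in controller_pubkeys]
    if missing:
        raise ValueError(f"missing public key for: {', '.join(missing)}")

    # Start from the non-empty lines that are already in the file.
    lines = [ln.strip() for ln in existing.splitlines() if ln.strip()]
    for user in CONTROLLER_USERS:
        key = controller_pubkeys[user].strip()
        if key not in lines:
            lines.append(key)
    return "\n".join(lines) + "\n"


def plan_distribution(controller_pubkeys, compute_nodes):
    """Map each compute node name to the desired authorized_keys content."""
    return {
        node: authorized_keys_for_evsuser(controller_pubkeys)
        for node in compute_nodes
    }


if __name__ == "__main__":
    # Placeholder key strings; real keys come from each user's .pub file.
    keys = {
        "evsuser": "ssh-rsa PLACEHOLDER1 evsuser@controller",
        "neutron": "ssh-rsa PLACEHOLDER2 neutron@controller",
        "root": "ssh-rsa PLACEHOLDER3 root@controller",
    }
    for node, content in plan_distribution(keys, ["compute-1", "compute-2"]).items():
        print(f"--- {node}: evsuser authorized_keys ---")
        print(content, end="")
```

Because keys that are already present are skipped, running the function again on its own output leaves the content unchanged, which is convenient when compute nodes are added later.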